Constraining parameters in Deep Learning

The standard gradient descent algorithm updates the parameters \(\theta_t\) of the loss function \(\texttt{loss}(\theta)\) as

\[\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} \, \mathbb{E}\left[\texttt{loss}(\theta_t)\right],\]

where the expectation is approximated by evaluating the cost and gradient over the full training set, and \(\eta\) is the learning rate.
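To make the update concrete, here is a minimal NumPy sketch of full-batch gradient descent on a linear least-squares loss. The loss, the synthetic data and the `gradient_descent` helper are illustrative assumptions, not part of the Keras workflow used later; averaging the gradient over the whole training set plays the role of the expectation.

```python
import numpy as np

def loss_and_grad(theta, X, y):
    """Half mean squared error of a linear model and its gradient w.r.t. theta."""
    residual = X @ theta - y
    loss = 0.5 * np.mean(residual ** 2)
    grad = X.T @ residual / len(y)   # average over the full training set
    return loss, grad

def gradient_descent(X, y, eta=0.1, steps=500):
    theta = np.zeros(X.shape[1])
    for _ in range(steps):
        _, grad = loss_and_grad(theta, X, y)
        theta = theta - eta * grad   # theta_{t+1} = theta_t - eta * grad
    return theta

# Hypothetical noise-free data generated by a known parameter vector.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_theta = np.array([1.0, -2.0, 0.5])
y = X @ true_theta

theta = gradient_descent(X, y)
print(theta)  # close to true_theta
```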

Stochastic Gradient Descent (SGD) drops the expectation from the update and computes the gradient from only a single training example, or a few. The update becomes

\[\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} \, \texttt{loss}\left(\theta_t; x^{(i)}, y^{(i)}\right),\]

where \((x^{(i)}, y^{(i)})\) is a pair from the training set.

In practice, each SGD update is computed on a minibatch of a few training examples rather than on a single one.
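A minimal NumPy sketch of minibatch SGD on the same kind of noise-free linear least-squares problem; the `sgd` helper, data and step size are illustrative assumptions. Each step uses only the gradient of the current minibatch, and setting `batch_size=1` gives the single-example update above.

```python
import numpy as np

def sgd(X, y, eta=0.05, batch_size=16, epochs=50, seed=0):
    rng = np.random.default_rng(seed)
    n = len(y)
    theta = np.zeros(X.shape[1])
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the training set once per epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X[idx], y[idx]
            # Gradient of the half mean squared error on this minibatch only.
            grad = Xb.T @ (Xb @ theta - yb) / len(idx)
            theta = theta - eta * grad
    return theta

# Hypothetical noise-free data generated by a known parameter vector.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
true_theta = np.array([1.0, -2.0, 0.5])
y = X @ true_theta

theta = sgd(X, y)
print(theta)  # close to true_theta
```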

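Keras incorporates constraints into SGD through projected gradient descent: after each update, the parameters are replaced by the point nearest to \(\theta_{t+1}\) that satisfies the constraint,

\[\theta_{t+1} \leftarrow \arg\min_{\theta \in \mathcal{C}} \lVert \theta - \theta_{t+1} \rVert_2,\]

where \(\mathcal{C}\) is the set of parameters that satisfy the constraint.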
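A minimal sketch of projected gradient descent, assuming a toy quadratic loss \(\tfrac12\lVert\theta - c\rVert^2\) and the nonnegativity set \(\mathcal{C} = \{\theta \ge 0\}\). Each iteration is an ordinary gradient step followed by a projection back onto \(\mathcal{C}\). The helper names are hypothetical.

```python
import numpy as np

def project_nonneg(theta):
    """Euclidean projection onto {theta : theta >= 0}."""
    return np.maximum(theta, 0.0)

def projected_gradient_descent(grad_fn, theta0, project, eta=0.1, steps=200):
    theta = project(np.asarray(theta0, dtype=float))
    for _ in range(steps):
        theta = theta - eta * grad_fn(theta)  # unconstrained gradient step
        theta = project(theta)                # pull back onto the feasible set
    return theta

# Minimize ||theta - c||^2 / 2 subject to theta >= 0; the solution is max(c, 0).
c = np.array([1.5, -0.7, 0.2])
theta = projected_gradient_descent(lambda t: t - c, np.zeros(3), project_nonneg)
print(theta)
```

For this loss the constrained minimizer is simply the projection of the unconstrained one, which makes the result easy to check.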

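Examples of constraints in Keras:

- Nonnegative: \(\theta \leftarrow \max(\theta, 0)\), applied elementwise (`tf.keras.constraints.NonNeg`).
- Norm one: \(\theta \leftarrow \theta / \lVert \theta \rVert_2\) (`tf.keras.constraints.UnitNorm`).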
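The two projections can be written in a few lines of NumPy. These functions are illustrations of what `NonNeg` and `UnitNorm` compute, not the Keras source; the `axis=0` default and the small `eps` mirror, as far as we know, the Keras `UnitNorm` behaviour and should be treated as assumptions.

```python
import numpy as np

def nonneg(w):
    # Zero out negative entries: the nearest point (Euclidean) with all entries >= 0.
    return np.where(w >= 0, w, 0.0)

def unit_norm(w, axis=0, eps=1e-7):
    # Rescale each slice along `axis` to unit L2 norm; eps avoids division by zero.
    return w / (eps + np.sqrt(np.sum(w ** 2, axis=axis, keepdims=True)))

w = np.array([[0.3, -1.2],
              [-0.4, 0.5]])
print(nonneg(w))
print(unit_norm(w))  # each column now has norm (almost exactly) 1
```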

```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow

plt.rc('font', family='serif')
plt.rc('xtick', labelsize='x-small')
plt.rc('ytick', labelsize='x-small')

# Model / data parameters
num_classes = 10
input_shape = (28, 28, 1)
use_samples = 1024  # train on a small subset of MNIST only

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = tensorflow.keras.datasets.mnist.load_data()
x_train = x_train[0:use_samples]
y_train = y_train[0:use_samples]

# Scale images to the [0, 1] range
x_train = x_train.astype("float32") / 255
x_test = x_test.astype("float32") / 255

# Make sure images have shape (28, 28, 1)
x_train = np.expand_dims(x_train, -1)
x_test = np.expand_dims(x_test, -1)
print("x_train shape:", x_train.shape)
print(x_train.shape[0], "train samples")
print(x_test.shape[0], "test samples")

# Convert class vectors to binary class matrices (one-hot encoding)
y_train = tensorflow.keras.utils.to_categorical(y_train, num_classes)
y_test = tensorflow.keras.utils.to_categorical(y_test, num_classes)
```

x_train shape: (1024, 28, 28, 1)

1024 train samples

10000 test samples
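`to_categorical` turns each integer label into a one-hot row. For integer labels in `range(num_classes)`, the same matrix can be built by indexing an identity matrix; the `one_hot` helper below is a hypothetical NumPy illustration, not the Keras implementation.

```python
import numpy as np

def one_hot(labels, num_classes):
    # Row k of the identity matrix is the one-hot vector for class k.
    return np.eye(num_classes, dtype="float32")[np.asarray(labels)]

labels = np.array([5, 0, 4])
Y = one_hot(labels, 10)
print(Y.shape)           # (3, 10)
print(Y.argmax(axis=1))  # argmax undoes the encoding, as used later for the confusion matrix
```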

```python
from morpholayers import *
from morpholayers.layers import *
from morpholayers.constraints import *
from morpholayers.regularizers import *
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, Flatten, Dropout, Dense
from tensorflow.keras.models import Model
from sklearn.metrics import classification_report, confusion_matrix

batch_size = 128
epochs = 100
nfilterstolearn = 8  # number of morphological filters in the first layer
filter_size = 5      # spatial size of each structuring element
regularizer_parameter = .002
```

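```python
def get_model(layer0):
    """Build a small classifier whose first layer is `layer0`.

    Returns the full model and a second model that shares its layers and
    outputs the activations of `layer0` (used to visualize the filters).
    """
    xin = Input(shape=input_shape)
    xlayer = layer0(xin)
    x = MaxPooling2D(pool_size=(2, 2))(xlayer)
    x = Conv2D(32, kernel_size=(3, 3), activation="relu")(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)
    x = Flatten()(x)
    x = Dropout(0.5)(x)
    xoutput = Dense(num_classes, activation="softmax")(x)
    return Model(xin, outputs=xoutput), Model(xin, outputs=xlayer)
```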

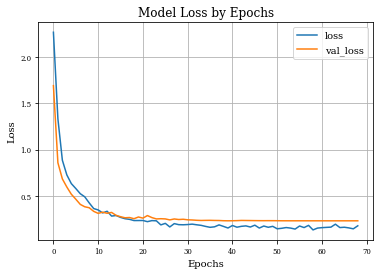

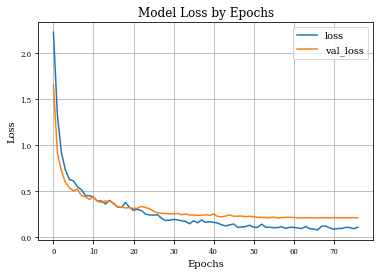

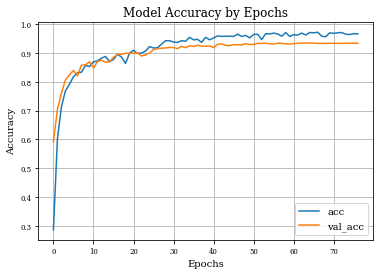

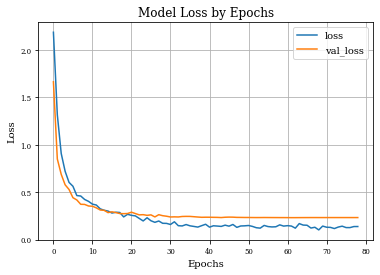

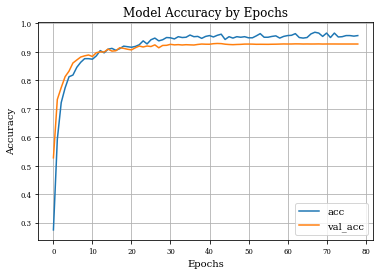

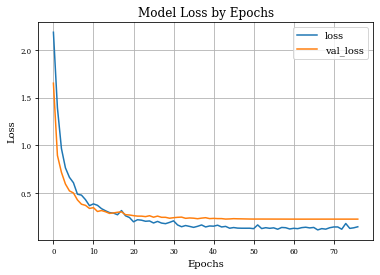

def plot_history(history):

plt.figure()

plt.plot(history.history['loss'],label='loss')

plt.plot(history.history['val_loss'],label='val_loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.title('Model Loss by Epochs')

plt.grid('on')

plt.legend()

plt.show()

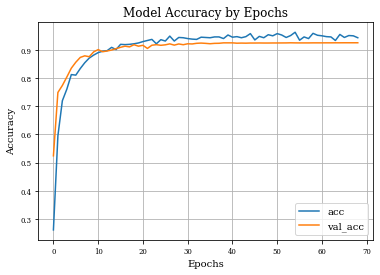

plt.plot(history.history['accuracy'],label='acc')

plt.plot(history.history['val_accuracy'],label='val_acc')

plt.grid('on')

plt.title('Model Accuracy by Epochs')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

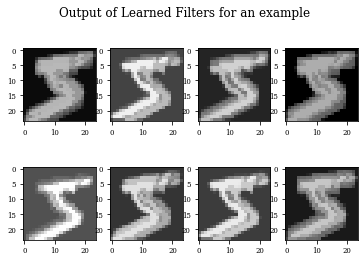

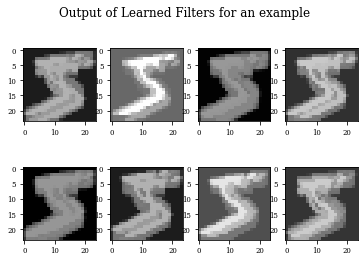

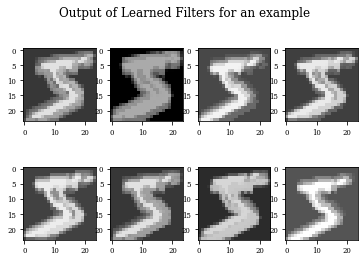

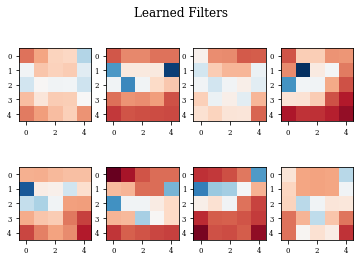

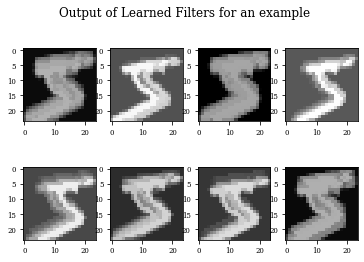

def plot_output_filters(model):

fig=plt.figure()

Z=model.predict(x_train[0:1,:,:,:])

for i in range(Z.shape[3]):

plt.subplot(2,Z.shape[3]/2,i+1)

plt.imshow(Z[0,:,:,i],cmap='gray',vmax=Z.max(),vmin=Z.min())

#plt.colorbar()

fig.suptitle('Output of Learned Filters for an example')

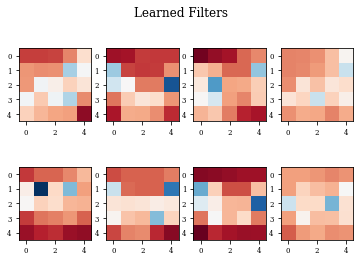

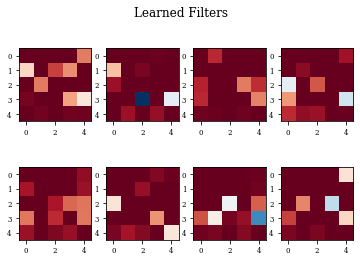

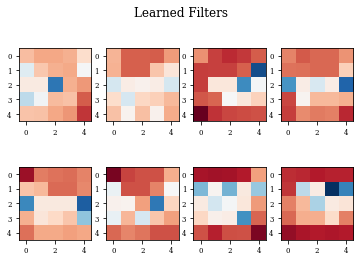

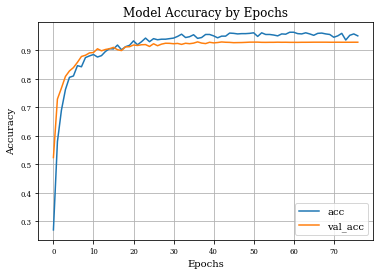

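```python
def plot_filters(model):
    # Weights of the first layer, indexed as (rows, cols, input channel, filter)
    Z = model.layers[-1].get_weights()[0]
    n = Z.shape[3]
    fig = plt.figure()
    for i in range(n):
        plt.subplot(2, (n + 1) // 2, i + 1)  # subplot needs integer grid sizes
        plt.imshow(Z[:, :, 0, i], cmap='RdBu', vmax=Z.max(), vmin=Z.min())
    fig.suptitle('Learned Filters')
```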

def see_results_layer(layer,lr=.001):

modeli,modellayer=get_model(layer)

modeli.summary()

modeli.compile(loss="categorical_crossentropy", optimizer=tensorflow.keras.optimizers.Adam(lr=lr), metrics=["accuracy"])

historyi=modeli.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, validation_data=(x_test,y_test),

callbacks=[tf.keras.callbacks.EarlyStopping(monitor='loss', patience=10,restore_best_weights=True),

tf.keras.callbacks.ReduceLROnPlateau(patience=3,factor=.5)],verbose=1)

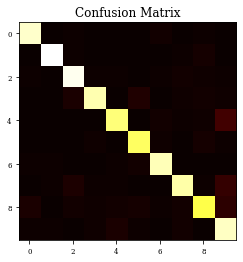

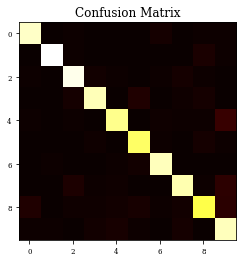

Y_test = np.argmax(y_test, axis=1) # Convert one-hot to index

y_pred = np.argmax(modeli.predict(x_test),axis=1)

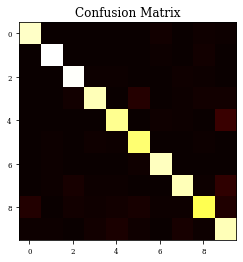

CM=confusion_matrix(Y_test, y_pred)

print(CM)

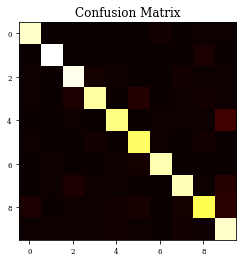

plt.imshow(CM,cmap='hot',vmin=0,vmax=1000)

plt.title('Confusion Matrix')

plt.show()

print(classification_report(Y_test, y_pred))

plot_history(historyi)

plot_filters(modellayer)

plot_output_filters(modellayer)

return historyi

Example of Dilation Layer
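`Dilation2D` replaces the weighted sum of a convolution with a max-plus operation. The sketch below is a plain NumPy grayscale dilation of a single-channel image with 'valid' padding, written in the correlation form \(\max_{a,b}\, x[i+a, j+b] + w[a,b]\). The function name and the `(kh, kw, n_filters)` kernel layout are hypothetical, and whether morpholayers also reflects the structuring element is an assumption not checked here. With one additive weight per kernel cell, 8 filters of size 5 × 5 give 5 · 5 · 8 = 200 parameters, consistent with the summary that follows.

```python
import numpy as np

def dilation2d_valid(image, kernels):
    """Max-plus dilation of a 2-D image by a stack of structuring functions.

    image:   (H, W) array
    kernels: (kh, kw, n_filters) array of additive weights
    returns: (H - kh + 1, W - kw + 1, n_filters), i.e. 'valid' padding
    """
    H, W = image.shape
    kh, kw, n = kernels.shape
    out = np.empty((H - kh + 1, W - kw + 1, n))
    for i in range(H - kh + 1):
        for j in range(W - kw + 1):
            patch = image[i:i + kh, j:j + kw, None]           # (kh, kw, 1)
            out[i, j] = np.max(patch + kernels, axis=(0, 1))  # max over the window, per filter
    return out

x = np.random.default_rng(0).random((28, 28))
w = np.zeros((5, 5, 8))  # flat structuring element: reduces to a 5x5 max filter
y = dilation2d_valid(x, w)
print(y.shape)  # (24, 24, 8)
```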

```python
histDil = see_results_layer(Dilation2D(nfilterstolearn, padding='valid', kernel_size=(filter_size, filter_size)), lr=.01)
```

Model: "model"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | [(None, 28, 28, 1)] | 0 |
| dilation2d (Dilation2D) | (None, 24, 24, 8) | 200 |
| max_pooling2d (MaxPooling2D) | (None, 12, 12, 8) | 0 |
| conv2d (Conv2D) | (None, 10, 10, 32) | 2336 |
| max_pooling2d_1 (MaxPooling2D) | (None, 5, 5, 32) | 0 |
| flatten (Flatten) | (None, 800) | 0 |
| dropout (Dropout) | (None, 800) | 0 |
| dense (Dense) | (None, 10) | 8010 |

Total params: 10,546

Trainable params: 10,546

Non-trainable params: 0
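The output shapes and parameter counts in the summary can be checked by hand: a 'valid' k × k window shrinks each side by k − 1, a 2 × 2 max-pool halves it, and the parameter counts follow from the kernel sizes. The helper names below are hypothetical; the 200 dilation parameters are taken as one weight per kernel cell with no bias, as the summary indicates.

```python
def valid_out(size, k):
    # Spatial size after a k x k window with padding='valid'
    return size - k + 1

def pool_out(size, p=2):
    # Spatial size after a p x p max-pool with stride p
    return size // p

h = valid_out(28, 5)  # Dilation2D 5x5, padding='valid' -> 24
h = pool_out(h)       # MaxPooling2D(2, 2)               -> 12
h = valid_out(h, 3)   # Conv2D 3x3                       -> 10
h = pool_out(h)       # MaxPooling2D(2, 2)               -> 5
flat = h * h * 32     # Flatten                          -> 800

params = {
    "dilation2d": 5 * 5 * 1 * 8,    # one additive weight per kernel cell -> 200
    "conv2d": 3 * 3 * 8 * 32 + 32,  # weights + biases -> 2336
    "dense": flat * 10 + 10,        # weights + biases -> 8010
}
print(flat, sum(params.values()))  # 800 10546
```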

Epoch 1/100

8/8 [==============================] - 2s 302ms/step - loss: 2.2667 - accuracy: 0.2607 - val_loss: 1.6904 - val_accuracy: 0.5240 - lr: 0.0100

Epoch 2/100

8/8 [==============================] - 2s 263ms/step - loss: 1.3328 - accuracy: 0.5947 - val_loss: 0.8580 - val_accuracy: 0.7490 - lr: 0.0100

Epoch 3/100

8/8 [==============================] - 2s 261ms/step - loss: 0.8852 - accuracy: 0.7197 - val_loss: 0.6837 - val_accuracy: 0.7739 - lr: 0.0100

Epoch 4/100

8/8 [==============================] - 2s 276ms/step - loss: 0.7266 - accuracy: 0.7607 - val_loss: 0.5960 - val_accuracy: 0.8039 - lr: 0.0100

Epoch 5/100

8/8 [==============================] - 2s 270ms/step - loss: 0.6331 - accuracy: 0.8125 - val_loss: 0.5185 - val_accuracy: 0.8352 - lr: 0.0100

Epoch 6/100

8/8 [==============================] - 2s 258ms/step - loss: 0.5799 - accuracy: 0.8105 - val_loss: 0.4648 - val_accuracy: 0.8564 - lr: 0.0100

Epoch 7/100

8/8 [==============================] - 2s 258ms/step - loss: 0.5239 - accuracy: 0.8340 - val_loss: 0.4087 - val_accuracy: 0.8735 - lr: 0.0100

Epoch 8/100

8/8 [==============================] - 2s 262ms/step - loss: 0.4906 - accuracy: 0.8545 - val_loss: 0.3832 - val_accuracy: 0.8791 - lr: 0.0100

Epoch 9/100

8/8 [==============================] - 2s 253ms/step - loss: 0.4243 - accuracy: 0.8711 - val_loss: 0.3724 - val_accuracy: 0.8760 - lr: 0.0100

Epoch 10/100

8/8 [==============================] - 2s 265ms/step - loss: 0.3638 - accuracy: 0.8818 - val_loss: 0.3336 - val_accuracy: 0.8932 - lr: 0.0100

Epoch 11/100

8/8 [==============================] - 2s 268ms/step - loss: 0.3486 - accuracy: 0.8906 - val_loss: 0.3119 - val_accuracy: 0.9012 - lr: 0.0100

Epoch 12/100

8/8 [==============================] - 2s 257ms/step - loss: 0.3167 - accuracy: 0.8955 - val_loss: 0.3248 - val_accuracy: 0.8937 - lr: 0.0100

Epoch 13/100

8/8 [==============================] - 2s 268ms/step - loss: 0.3346 - accuracy: 0.8975 - val_loss: 0.3129 - val_accuracy: 0.8963 - lr: 0.0100

Epoch 14/100

8/8 [==============================] - 2s 249ms/step - loss: 0.2823 - accuracy: 0.9092 - val_loss: 0.3220 - val_accuracy: 0.8997 - lr: 0.0100

Epoch 15/100

8/8 [==============================] - 2s 249ms/step - loss: 0.2889 - accuracy: 0.9014 - val_loss: 0.2904 - val_accuracy: 0.9046 - lr: 0.0050

Epoch 16/100

8/8 [==============================] - 2s 250ms/step - loss: 0.2685 - accuracy: 0.9199 - val_loss: 0.2758 - val_accuracy: 0.9094 - lr: 0.0050

Epoch 17/100

8/8 [==============================] - 2s 288ms/step - loss: 0.2541 - accuracy: 0.9189 - val_loss: 0.2643 - val_accuracy: 0.9134 - lr: 0.0050

Epoch 18/100

8/8 [==============================] - 2s 283ms/step - loss: 0.2474 - accuracy: 0.9199 - val_loss: 0.2669 - val_accuracy: 0.9109 - lr: 0.0050

Epoch 19/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2342 - accuracy: 0.9219 - val_loss: 0.2543 - val_accuracy: 0.9186 - lr: 0.0050

Epoch 20/100

8/8 [==============================] - 2s 273ms/step - loss: 0.2345 - accuracy: 0.9248 - val_loss: 0.2727 - val_accuracy: 0.9134 - lr: 0.0050

Epoch 21/100

8/8 [==============================] - 2s 266ms/step - loss: 0.2349 - accuracy: 0.9297 - val_loss: 0.2605 - val_accuracy: 0.9165 - lr: 0.0050

Epoch 22/100

8/8 [==============================] - 2s 270ms/step - loss: 0.2224 - accuracy: 0.9336 - val_loss: 0.2882 - val_accuracy: 0.9054 - lr: 0.0050

Epoch 23/100

8/8 [==============================] - 2s 261ms/step - loss: 0.2336 - accuracy: 0.9375 - val_loss: 0.2662 - val_accuracy: 0.9168 - lr: 0.0025

Epoch 24/100

8/8 [==============================] - 2s 255ms/step - loss: 0.2316 - accuracy: 0.9219 - val_loss: 0.2519 - val_accuracy: 0.9187 - lr: 0.0025

Epoch 25/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1873 - accuracy: 0.9365 - val_loss: 0.2541 - val_accuracy: 0.9165 - lr: 0.0025

Epoch 26/100

8/8 [==============================] - 2s 250ms/step - loss: 0.2040 - accuracy: 0.9316 - val_loss: 0.2519 - val_accuracy: 0.9182 - lr: 0.0025

Epoch 27/100

8/8 [==============================] - 2s 250ms/step - loss: 0.1653 - accuracy: 0.9492 - val_loss: 0.2413 - val_accuracy: 0.9216 - lr: 0.0025

Epoch 28/100

8/8 [==============================] - 2s 251ms/step - loss: 0.2012 - accuracy: 0.9316 - val_loss: 0.2515 - val_accuracy: 0.9171 - lr: 0.0025

Epoch 29/100

8/8 [==============================] - 2s 244ms/step - loss: 0.1900 - accuracy: 0.9443 - val_loss: 0.2455 - val_accuracy: 0.9213 - lr: 0.0025

Epoch 30/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1887 - accuracy: 0.9434 - val_loss: 0.2483 - val_accuracy: 0.9186 - lr: 0.0025

Epoch 31/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1912 - accuracy: 0.9404 - val_loss: 0.2422 - val_accuracy: 0.9219 - lr: 0.0012

Epoch 32/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1956 - accuracy: 0.9385 - val_loss: 0.2403 - val_accuracy: 0.9213 - lr: 0.0012

Epoch 33/100

8/8 [==============================] - 2s 251ms/step - loss: 0.1887 - accuracy: 0.9375 - val_loss: 0.2371 - val_accuracy: 0.9236 - lr: 0.0012

Epoch 34/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1825 - accuracy: 0.9453 - val_loss: 0.2353 - val_accuracy: 0.9244 - lr: 0.0012

Epoch 35/100

8/8 [==============================] - 2s 253ms/step - loss: 0.1711 - accuracy: 0.9443 - val_loss: 0.2362 - val_accuracy: 0.9234 - lr: 0.0012

Epoch 36/100

8/8 [==============================] - 2s 253ms/step - loss: 0.1613 - accuracy: 0.9434 - val_loss: 0.2367 - val_accuracy: 0.9221 - lr: 0.0012

Epoch 37/100

8/8 [==============================] - 2s 249ms/step - loss: 0.1667 - accuracy: 0.9463 - val_loss: 0.2354 - val_accuracy: 0.9234 - lr: 0.0012

Epoch 38/100

8/8 [==============================] - 2s 245ms/step - loss: 0.1874 - accuracy: 0.9463 - val_loss: 0.2348 - val_accuracy: 0.9237 - lr: 6.2500e-04

Epoch 39/100

8/8 [==============================] - 2s 260ms/step - loss: 0.1712 - accuracy: 0.9404 - val_loss: 0.2324 - val_accuracy: 0.9252 - lr: 6.2500e-04

Epoch 40/100

8/8 [==============================] - 2s 249ms/step - loss: 0.1539 - accuracy: 0.9531 - val_loss: 0.2317 - val_accuracy: 0.9252 - lr: 6.2500e-04

Epoch 41/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1811 - accuracy: 0.9453 - val_loss: 0.2322 - val_accuracy: 0.9252 - lr: 6.2500e-04

Epoch 42/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1626 - accuracy: 0.9473 - val_loss: 0.2334 - val_accuracy: 0.9240 - lr: 6.2500e-04

Epoch 43/100

8/8 [==============================] - 2s 257ms/step - loss: 0.1720 - accuracy: 0.9434 - val_loss: 0.2355 - val_accuracy: 0.9244 - lr: 6.2500e-04

Epoch 44/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1766 - accuracy: 0.9473 - val_loss: 0.2349 - val_accuracy: 0.9241 - lr: 3.1250e-04

Epoch 45/100

8/8 [==============================] - 2s 257ms/step - loss: 0.1645 - accuracy: 0.9580 - val_loss: 0.2343 - val_accuracy: 0.9246 - lr: 3.1250e-04

Epoch 46/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1844 - accuracy: 0.9355 - val_loss: 0.2336 - val_accuracy: 0.9246 - lr: 3.1250e-04

Epoch 47/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1534 - accuracy: 0.9482 - val_loss: 0.2331 - val_accuracy: 0.9248 - lr: 1.5625e-04

Epoch 48/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1752 - accuracy: 0.9434 - val_loss: 0.2329 - val_accuracy: 0.9246 - lr: 1.5625e-04

Epoch 49/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1617 - accuracy: 0.9541 - val_loss: 0.2331 - val_accuracy: 0.9246 - lr: 1.5625e-04

Epoch 50/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1718 - accuracy: 0.9502 - val_loss: 0.2329 - val_accuracy: 0.9248 - lr: 7.8125e-05

Epoch 51/100

8/8 [==============================] - 2s 251ms/step - loss: 0.1453 - accuracy: 0.9580 - val_loss: 0.2325 - val_accuracy: 0.9248 - lr: 7.8125e-05

Epoch 52/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1508 - accuracy: 0.9531 - val_loss: 0.2319 - val_accuracy: 0.9249 - lr: 7.8125e-05

Epoch 53/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1587 - accuracy: 0.9443 - val_loss: 0.2318 - val_accuracy: 0.9250 - lr: 3.9062e-05

Epoch 54/100

8/8 [==============================] - 2s 251ms/step - loss: 0.1529 - accuracy: 0.9512 - val_loss: 0.2317 - val_accuracy: 0.9254 - lr: 3.9062e-05

Epoch 55/100

8/8 [==============================] - 2s 249ms/step - loss: 0.1423 - accuracy: 0.9629 - val_loss: 0.2317 - val_accuracy: 0.9251 - lr: 3.9062e-05

Epoch 56/100

8/8 [==============================] - 2s 251ms/step - loss: 0.1756 - accuracy: 0.9346 - val_loss: 0.2317 - val_accuracy: 0.9250 - lr: 1.9531e-05

Epoch 57/100

8/8 [==============================] - 2s 253ms/step - loss: 0.1585 - accuracy: 0.9463 - val_loss: 0.2317 - val_accuracy: 0.9250 - lr: 1.9531e-05

Epoch 58/100

8/8 [==============================] - 2s 248ms/step - loss: 0.1822 - accuracy: 0.9404 - val_loss: 0.2317 - val_accuracy: 0.9250 - lr: 1.9531e-05

Epoch 59/100

8/8 [==============================] - 2s 253ms/step - loss: 0.1333 - accuracy: 0.9590 - val_loss: 0.2316 - val_accuracy: 0.9252 - lr: 9.7656e-06

Epoch 60/100

8/8 [==============================] - 2s 254ms/step - loss: 0.1531 - accuracy: 0.9521 - val_loss: 0.2316 - val_accuracy: 0.9252 - lr: 9.7656e-06

Epoch 61/100

8/8 [==============================] - 2s 268ms/step - loss: 0.1577 - accuracy: 0.9502 - val_loss: 0.2316 - val_accuracy: 0.9252 - lr: 9.7656e-06

Epoch 62/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1607 - accuracy: 0.9473 - val_loss: 0.2316 - val_accuracy: 0.9253 - lr: 9.7656e-06

Epoch 63/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1632 - accuracy: 0.9463 - val_loss: 0.2316 - val_accuracy: 0.9253 - lr: 9.7656e-06

Epoch 64/100

8/8 [==============================] - 2s 283ms/step - loss: 0.1951 - accuracy: 0.9336 - val_loss: 0.2316 - val_accuracy: 0.9254 - lr: 4.8828e-06

Epoch 65/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1586 - accuracy: 0.9551 - val_loss: 0.2316 - val_accuracy: 0.9254 - lr: 4.8828e-06

Epoch 66/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1622 - accuracy: 0.9443 - val_loss: 0.2316 - val_accuracy: 0.9255 - lr: 4.8828e-06

Epoch 67/100

8/8 [==============================] - 2s 249ms/step - loss: 0.1551 - accuracy: 0.9512 - val_loss: 0.2316 - val_accuracy: 0.9255 - lr: 2.4414e-06

Epoch 68/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1450 - accuracy: 0.9502 - val_loss: 0.2315 - val_accuracy: 0.9255 - lr: 2.4414e-06

Epoch 69/100

8/8 [==============================] - 2s 256ms/step - loss: 0.1777 - accuracy: 0.9434 - val_loss: 0.2316 - val_accuracy: 0.9255 - lr: 2.4414e-06
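The `lr` column in this log comes from `ReduceLROnPlateau(patience=3, factor=.5)`. The pure-Python sketch below replays that rule on the first 30 `val_loss` values of this run, assuming the Keras defaults `monitor='val_loss'`, `min_delta=1e-4` and `cooldown=0`. It is a simplified re-implementation for illustration, not the Keras source.

```python
def simulate_reduce_on_plateau(val_losses, lr=0.01, factor=0.5, patience=3, min_delta=1e-4):
    best = float("inf")
    wait = 0
    lrs = []
    for loss in val_losses:
        lrs.append(lr)  # learning rate in effect during this epoch (what the log shows)
        if loss < best - min_delta:
            best, wait = loss, 0  # improvement: reset the patience counter
        else:
            wait += 1
            if wait >= patience:  # plateau: shrink the learning rate
                lr *= factor
                wait = 0
    return lrs

# val_loss for epochs 1-30 of the Dilation2D run above
val_losses = [1.6904, 0.8580, 0.6837, 0.5960, 0.5185, 0.4648, 0.4087, 0.3832, 0.3724, 0.3336,
              0.3119, 0.3248, 0.3129, 0.3220, 0.2904, 0.2758, 0.2643, 0.2669, 0.2543, 0.2727,
              0.2605, 0.2882, 0.2662, 0.2519, 0.2541, 0.2519, 0.2413, 0.2515, 0.2455, 0.2483]

lrs = simulate_reduce_on_plateau(val_losses)
for epoch, lr in enumerate(lrs, start=1):
    if epoch == 1 or lr != lrs[epoch - 2]:
        print(f"epoch {epoch}: lr = {lr:g}")  # epochs 1, 15 and 23, as in the log
```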

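| true \ predicted | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 946 | 0 | 6 | 1 | 0 | 3 | 13 | 2 | 6 | 3 |
| 1 | 0 | 1102 | 5 | 1 | 1 | 1 | 3 | 4 | 16 | 2 |
| 2 | 5 | 0 | 983 | 7 | 6 | 1 | 4 | 14 | 8 | 4 |
| 3 | 0 | 0 | 25 | 918 | 1 | 34 | 1 | 8 | 14 | 9 |
| 4 | 2 | 1 | 3 | 1 | 864 | 0 | 12 | 6 | 9 | 84 |
| 5 | 1 | 2 | 1 | 11 | 3 | 840 | 8 | 1 | 19 | 6 |
| 6 | 4 | 5 | 1 | 0 | 7 | 12 | 926 | 0 | 3 | 0 |
| 7 | 0 | 4 | 29 | 7 | 4 | 0 | 0 | 914 | 6 | 64 |
| 8 | 25 | 2 | 12 | 11 | 12 | 17 | 7 | 14 | 820 | 54 |
| 9 | 5 | 4 | 2 | 9 | 25 | 7 | 1 | 14 | 3 | 939 |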

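| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.96 | 0.97 | 0.96 | 980 |
| 1 | 0.98 | 0.97 | 0.98 | 1135 |
| 2 | 0.92 | 0.95 | 0.94 | 1032 |
| 3 | 0.95 | 0.91 | 0.93 | 1010 |
| 4 | 0.94 | 0.88 | 0.91 | 982 |
| 5 | 0.92 | 0.94 | 0.93 | 892 |
| 6 | 0.95 | 0.97 | 0.96 | 958 |
| 7 | 0.94 | 0.89 | 0.91 | 1028 |
| 8 | 0.91 | 0.84 | 0.87 | 974 |
| 9 | 0.81 | 0.93 | 0.86 | 1009 |
| accuracy | | | 0.93 | 10000 |
| macro avg | 0.93 | 0.92 | 0.92 | 10000 |
| weighted avg | 0.93 | 0.93 | 0.93 | 10000 |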
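The per-class numbers in the report follow directly from the confusion matrix of this run (rows are true digits, columns are predicted digits): precision divides each diagonal entry by its column sum, recall divides it by its row sum, and the F1-score is their harmonic mean. The NumPy sketch below recomputes them from the printed matrix.

```python
import numpy as np

# Confusion matrix printed above for the plain Dilation2D model.
CM = np.array([
    [946, 0, 6, 1, 0, 3, 13, 2, 6, 3],
    [0, 1102, 5, 1, 1, 1, 3, 4, 16, 2],
    [5, 0, 983, 7, 6, 1, 4, 14, 8, 4],
    [0, 0, 25, 918, 1, 34, 1, 8, 14, 9],
    [2, 1, 3, 1, 864, 0, 12, 6, 9, 84],
    [1, 2, 1, 11, 3, 840, 8, 1, 19, 6],
    [4, 5, 1, 0, 7, 12, 926, 0, 3, 0],
    [0, 4, 29, 7, 4, 0, 0, 914, 6, 64],
    [25, 2, 12, 11, 12, 17, 7, 14, 820, 54],
    [5, 4, 2, 9, 25, 7, 1, 14, 3, 939],
])

tp = np.diag(CM)             # correctly classified test images per digit
support = CM.sum(axis=1)     # test images per true digit
predicted = CM.sum(axis=0)   # test images assigned to each digit

precision = tp / predicted
recall = tp / support
f1 = 2 * precision * recall / (precision + recall)
accuracy = tp.sum() / CM.sum()

print(f"accuracy = {accuracy:.4f}")
for k in range(10):
    print(k, f"{precision[k]:.2f} {recall[k]:.2f} {f1[k]:.2f} {support[k]}")
```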

Example of Dilation Layer with Nonnegative Constraint

```python
histDilnonneg = see_results_layer(Dilation2D(nfilterstolearn, padding='valid', kernel_size=(filter_size, filter_size), kernel_constraint=tensorflow.keras.constraints.NonNeg()), lr=.01)
```

Model: "model_2"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_2 (InputLayer) | [(None, 28, 28, 1)] | 0 |
| dilation2d_1 (Dilation2D) | (None, 24, 24, 8) | 200 |
| max_pooling2d_2 (MaxPooling2D) | (None, 12, 12, 8) | 0 |
| conv2d_1 (Conv2D) | (None, 10, 10, 32) | 2336 |
| max_pooling2d_3 (MaxPooling2D) | (None, 5, 5, 32) | 0 |
| flatten_1 (Flatten) | (None, 800) | 0 |
| dropout_1 (Dropout) | (None, 800) | 0 |
| dense_1 (Dense) | (None, 10) | 8010 |

Total params: 10,546

Trainable params: 10,546

Non-trainable params: 0

Epoch 1/100

8/8 [==============================] - 2s 277ms/step - loss: 2.2290 - accuracy: 0.2861 - val_loss: 1.6591 - val_accuracy: 0.5920 - lr: 0.0100

Epoch 2/100

8/8 [==============================] - 2s 250ms/step - loss: 1.3239 - accuracy: 0.6016 - val_loss: 0.9148 - val_accuracy: 0.7036 - lr: 0.0100

Epoch 3/100

8/8 [==============================] - 2s 265ms/step - loss: 0.9202 - accuracy: 0.7129 - val_loss: 0.7220 - val_accuracy: 0.7603 - lr: 0.0100

Epoch 4/100

8/8 [==============================] - 2s 265ms/step - loss: 0.7307 - accuracy: 0.7686 - val_loss: 0.5971 - val_accuracy: 0.8064 - lr: 0.0100

Epoch 5/100

8/8 [==============================] - 2s 262ms/step - loss: 0.6285 - accuracy: 0.7910 - val_loss: 0.5380 - val_accuracy: 0.8236 - lr: 0.0100

Epoch 6/100

8/8 [==============================] - 2s 279ms/step - loss: 0.6135 - accuracy: 0.8174 - val_loss: 0.5059 - val_accuracy: 0.8393 - lr: 0.0100

Epoch 7/100

8/8 [==============================] - 2s 268ms/step - loss: 0.5504 - accuracy: 0.8311 - val_loss: 0.5236 - val_accuracy: 0.8202 - lr: 0.0100

Epoch 8/100

8/8 [==============================] - 2s 255ms/step - loss: 0.5178 - accuracy: 0.8330 - val_loss: 0.4536 - val_accuracy: 0.8575 - lr: 0.0100

Epoch 9/100

8/8 [==============================] - 2s 256ms/step - loss: 0.4527 - accuracy: 0.8564 - val_loss: 0.4400 - val_accuracy: 0.8583 - lr: 0.0100

Epoch 10/100

8/8 [==============================] - 2s 257ms/step - loss: 0.4528 - accuracy: 0.8525 - val_loss: 0.4127 - val_accuracy: 0.8694 - lr: 0.0100

Epoch 11/100

8/8 [==============================] - 2s 250ms/step - loss: 0.4358 - accuracy: 0.8691 - val_loss: 0.4406 - val_accuracy: 0.8477 - lr: 0.0100

Epoch 12/100

8/8 [==============================] - 2s 248ms/step - loss: 0.3937 - accuracy: 0.8721 - val_loss: 0.3901 - val_accuracy: 0.8701 - lr: 0.0100

Epoch 13/100

8/8 [==============================] - 2s 260ms/step - loss: 0.3958 - accuracy: 0.8818 - val_loss: 0.3804 - val_accuracy: 0.8746 - lr: 0.0100

Epoch 14/100

8/8 [==============================] - 2s 249ms/step - loss: 0.3622 - accuracy: 0.8877 - val_loss: 0.3951 - val_accuracy: 0.8678 - lr: 0.0100

Epoch 15/100

8/8 [==============================] - 2s 248ms/step - loss: 0.4017 - accuracy: 0.8701 - val_loss: 0.3905 - val_accuracy: 0.8692 - lr: 0.0100

Epoch 16/100

8/8 [==============================] - 2s 267ms/step - loss: 0.3665 - accuracy: 0.8770 - val_loss: 0.3735 - val_accuracy: 0.8848 - lr: 0.0100

Epoch 17/100

8/8 [==============================] - 2s 251ms/step - loss: 0.3317 - accuracy: 0.8955 - val_loss: 0.3278 - val_accuracy: 0.8949 - lr: 0.0100

Epoch 18/100

8/8 [==============================] - 2s 275ms/step - loss: 0.3239 - accuracy: 0.8857 - val_loss: 0.3283 - val_accuracy: 0.8948 - lr: 0.0100

Epoch 19/100

8/8 [==============================] - 2s 251ms/step - loss: 0.3785 - accuracy: 0.8633 - val_loss: 0.3191 - val_accuracy: 0.8989 - lr: 0.0100

Epoch 20/100

8/8 [==============================] - 2s 270ms/step - loss: 0.3257 - accuracy: 0.8994 - val_loss: 0.3229 - val_accuracy: 0.8983 - lr: 0.0100

Epoch 21/100

8/8 [==============================] - 2s 249ms/step - loss: 0.2940 - accuracy: 0.9092 - val_loss: 0.3137 - val_accuracy: 0.8995 - lr: 0.0100

Epoch 22/100

8/8 [==============================] - 2s 255ms/step - loss: 0.3045 - accuracy: 0.8984 - val_loss: 0.3159 - val_accuracy: 0.9004 - lr: 0.0100

Epoch 23/100

8/8 [==============================] - 2s 259ms/step - loss: 0.2901 - accuracy: 0.8994 - val_loss: 0.3353 - val_accuracy: 0.8892 - lr: 0.0100

Epoch 24/100

8/8 [==============================] - 2s 264ms/step - loss: 0.2519 - accuracy: 0.9062 - val_loss: 0.3250 - val_accuracy: 0.8936 - lr: 0.0100

Epoch 25/100

8/8 [==============================] - 2s 250ms/step - loss: 0.2435 - accuracy: 0.9219 - val_loss: 0.3088 - val_accuracy: 0.8991 - lr: 0.0050

Epoch 26/100

8/8 [==============================] - 2s 254ms/step - loss: 0.2411 - accuracy: 0.9170 - val_loss: 0.2837 - val_accuracy: 0.9117 - lr: 0.0050

Epoch 27/100

8/8 [==============================] - 2s 247ms/step - loss: 0.2463 - accuracy: 0.9180 - val_loss: 0.2662 - val_accuracy: 0.9146 - lr: 0.0050

Epoch 28/100

8/8 [==============================] - 2s 253ms/step - loss: 0.2067 - accuracy: 0.9307 - val_loss: 0.2611 - val_accuracy: 0.9165 - lr: 0.0050

Epoch 29/100

8/8 [==============================] - 2s 254ms/step - loss: 0.1827 - accuracy: 0.9424 - val_loss: 0.2602 - val_accuracy: 0.9171 - lr: 0.0050

Epoch 30/100

8/8 [==============================] - 2s 270ms/step - loss: 0.1863 - accuracy: 0.9424 - val_loss: 0.2546 - val_accuracy: 0.9192 - lr: 0.0050

Epoch 31/100

8/8 [==============================] - 2s 267ms/step - loss: 0.1950 - accuracy: 0.9375 - val_loss: 0.2554 - val_accuracy: 0.9186 - lr: 0.0050

Epoch 32/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1898 - accuracy: 0.9365 - val_loss: 0.2597 - val_accuracy: 0.9149 - lr: 0.0050

Epoch 33/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1799 - accuracy: 0.9424 - val_loss: 0.2449 - val_accuracy: 0.9232 - lr: 0.0050

Epoch 34/100

8/8 [==============================] - 2s 251ms/step - loss: 0.1732 - accuracy: 0.9404 - val_loss: 0.2535 - val_accuracy: 0.9182 - lr: 0.0050

Epoch 35/100

8/8 [==============================] - 2s 282ms/step - loss: 0.1478 - accuracy: 0.9541 - val_loss: 0.2416 - val_accuracy: 0.9245 - lr: 0.0050

Epoch 36/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1792 - accuracy: 0.9453 - val_loss: 0.2431 - val_accuracy: 0.9226 - lr: 0.0050

Epoch 37/100

8/8 [==============================] - 2s 250ms/step - loss: 0.1579 - accuracy: 0.9473 - val_loss: 0.2380 - val_accuracy: 0.9267 - lr: 0.0050

Epoch 38/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1890 - accuracy: 0.9365 - val_loss: 0.2405 - val_accuracy: 0.9238 - lr: 0.0050

Epoch 39/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1627 - accuracy: 0.9541 - val_loss: 0.2452 - val_accuracy: 0.9231 - lr: 0.0050

Epoch 40/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1701 - accuracy: 0.9453 - val_loss: 0.2372 - val_accuracy: 0.9238 - lr: 0.0050

Epoch 41/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1627 - accuracy: 0.9521 - val_loss: 0.2553 - val_accuracy: 0.9185 - lr: 0.0050

Epoch 42/100

8/8 [==============================] - 2s 268ms/step - loss: 0.1551 - accuracy: 0.9590 - val_loss: 0.2269 - val_accuracy: 0.9298 - lr: 0.0050

Epoch 43/100

8/8 [==============================] - 2s 248ms/step - loss: 0.1361 - accuracy: 0.9570 - val_loss: 0.2232 - val_accuracy: 0.9314 - lr: 0.0050

Epoch 44/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1240 - accuracy: 0.9580 - val_loss: 0.2317 - val_accuracy: 0.9264 - lr: 0.0050

Epoch 45/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1345 - accuracy: 0.9580 - val_loss: 0.2432 - val_accuracy: 0.9252 - lr: 0.0050

Epoch 46/100

8/8 [==============================] - 2s 274ms/step - loss: 0.1456 - accuracy: 0.9570 - val_loss: 0.2283 - val_accuracy: 0.9288 - lr: 0.0050

Epoch 47/100

8/8 [==============================] - 2s 262ms/step - loss: 0.1087 - accuracy: 0.9648 - val_loss: 0.2303 - val_accuracy: 0.9281 - lr: 0.0025

Epoch 48/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1114 - accuracy: 0.9570 - val_loss: 0.2310 - val_accuracy: 0.9278 - lr: 0.0025

Epoch 49/100

8/8 [==============================] - 2s 253ms/step - loss: 0.1176 - accuracy: 0.9609 - val_loss: 0.2243 - val_accuracy: 0.9316 - lr: 0.0025

Epoch 50/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1318 - accuracy: 0.9521 - val_loss: 0.2287 - val_accuracy: 0.9293 - lr: 0.0012

Epoch 51/100

8/8 [==============================] - 2s 263ms/step - loss: 0.1090 - accuracy: 0.9639 - val_loss: 0.2235 - val_accuracy: 0.9294 - lr: 0.0012

Epoch 52/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1085 - accuracy: 0.9648 - val_loss: 0.2169 - val_accuracy: 0.9331 - lr: 0.0012

Epoch 53/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1434 - accuracy: 0.9463 - val_loss: 0.2167 - val_accuracy: 0.9330 - lr: 0.0012

Epoch 54/100

8/8 [==============================] - 2s 259ms/step - loss: 0.1100 - accuracy: 0.9668 - val_loss: 0.2147 - val_accuracy: 0.9339 - lr: 0.0012

Epoch 55/100

8/8 [==============================] - 2s 261ms/step - loss: 0.1092 - accuracy: 0.9658 - val_loss: 0.2141 - val_accuracy: 0.9314 - lr: 0.0012

Epoch 56/100

8/8 [==============================] - 2s 256ms/step - loss: 0.1050 - accuracy: 0.9688 - val_loss: 0.2189 - val_accuracy: 0.9304 - lr: 0.0012

Epoch 57/100

8/8 [==============================] - 2s 268ms/step - loss: 0.1060 - accuracy: 0.9658 - val_loss: 0.2113 - val_accuracy: 0.9339 - lr: 0.0012

Epoch 58/100

8/8 [==============================] - 2s 281ms/step - loss: 0.1146 - accuracy: 0.9580 - val_loss: 0.2148 - val_accuracy: 0.9333 - lr: 0.0012

Epoch 59/100

8/8 [==============================] - 2s 277ms/step - loss: 0.0971 - accuracy: 0.9707 - val_loss: 0.2173 - val_accuracy: 0.9310 - lr: 0.0012

Epoch 60/100

8/8 [==============================] - 2s 270ms/step - loss: 0.1093 - accuracy: 0.9570 - val_loss: 0.2172 - val_accuracy: 0.9309 - lr: 0.0012

Epoch 61/100

8/8 [==============================] - 2s 280ms/step - loss: 0.1081 - accuracy: 0.9629 - val_loss: 0.2152 - val_accuracy: 0.9320 - lr: 6.2500e-04

Epoch 62/100

8/8 [==============================] - 2s 272ms/step - loss: 0.1016 - accuracy: 0.9619 - val_loss: 0.2119 - val_accuracy: 0.9335 - lr: 6.2500e-04

Epoch 63/100

8/8 [==============================] - 2s 281ms/step - loss: 0.0956 - accuracy: 0.9688 - val_loss: 0.2106 - val_accuracy: 0.9343 - lr: 6.2500e-04

Epoch 64/100

8/8 [==============================] - 2s 269ms/step - loss: 0.1169 - accuracy: 0.9619 - val_loss: 0.2119 - val_accuracy: 0.9341 - lr: 6.2500e-04

Epoch 65/100

8/8 [==============================] - 2s 266ms/step - loss: 0.0939 - accuracy: 0.9707 - val_loss: 0.2132 - val_accuracy: 0.9342 - lr: 6.2500e-04

Epoch 66/100

8/8 [==============================] - 2s 264ms/step - loss: 0.0897 - accuracy: 0.9697 - val_loss: 0.2115 - val_accuracy: 0.9337 - lr: 6.2500e-04

Epoch 67/100

8/8 [==============================] - 2s 272ms/step - loss: 0.0805 - accuracy: 0.9717 - val_loss: 0.2125 - val_accuracy: 0.9331 - lr: 3.1250e-04

Epoch 68/100

8/8 [==============================] - 2s 269ms/step - loss: 0.1207 - accuracy: 0.9580 - val_loss: 0.2131 - val_accuracy: 0.9329 - lr: 3.1250e-04

Epoch 69/100

8/8 [==============================] - 2s 254ms/step - loss: 0.1217 - accuracy: 0.9561 - val_loss: 0.2128 - val_accuracy: 0.9329 - lr: 3.1250e-04

Epoch 70/100

8/8 [==============================] - 2s 240ms/step - loss: 0.1024 - accuracy: 0.9697 - val_loss: 0.2126 - val_accuracy: 0.9332 - lr: 1.5625e-04

Epoch 71/100

8/8 [==============================] - 2s 299ms/step - loss: 0.0880 - accuracy: 0.9678 - val_loss: 0.2127 - val_accuracy: 0.9333 - lr: 1.5625e-04

Epoch 72/100

8/8 [==============================] - 3s 349ms/step - loss: 0.0958 - accuracy: 0.9697 - val_loss: 0.2128 - val_accuracy: 0.9332 - lr: 1.5625e-04

Epoch 73/100

8/8 [==============================] - 3s 431ms/step - loss: 0.0957 - accuracy: 0.9707 - val_loss: 0.2129 - val_accuracy: 0.9332 - lr: 7.8125e-05

Epoch 74/100

8/8 [==============================] - 3s 421ms/step - loss: 0.1081 - accuracy: 0.9648 - val_loss: 0.2129 - val_accuracy: 0.9335 - lr: 7.8125e-05

Epoch 75/100

8/8 [==============================] - 3s 420ms/step - loss: 0.1061 - accuracy: 0.9639 - val_loss: 0.2131 - val_accuracy: 0.9339 - lr: 7.8125e-05

Epoch 76/100

8/8 [==============================] - 3s 414ms/step - loss: 0.0938 - accuracy: 0.9668 - val_loss: 0.2129 - val_accuracy: 0.9338 - lr: 3.9062e-05

Epoch 77/100

8/8 [==============================] - 3s 390ms/step - loss: 0.1092 - accuracy: 0.9658 - val_loss: 0.2129 - val_accuracy: 0.9337 - lr: 3.9062e-05

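| true \ predicted | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 943 | 0 | 2 | 0 | 2 | 2 | 15 | 1 | 8 | 7 |
| 1 | 1 | 1105 | 3 | 2 | 2 | 1 | 4 | 2 | 14 | 1 |
| 2 | 2 | 1 | 995 | 7 | 6 | 2 | 2 | 10 | 5 | 2 |
| 3 | 0 | 2 | 14 | 926 | 0 | 40 | 0 | 4 | 12 | 12 |
| 4 | 3 | 0 | 3 | 0 | 888 | 0 | 11 | 4 | 2 | 71 |
| 5 | 1 | 4 | 1 | 11 | 4 | 858 | 3 | 0 | 7 | 3 |
| 6 | 1 | 7 | 1 | 0 | 3 | 8 | 935 | 0 | 3 | 0 |
| 7 | 0 | 4 | 21 | 6 | 7 | 1 | 0 | 928 | 5 | 56 |
| 8 | 39 | 3 | 13 | 8 | 15 | 23 | 5 | 7 | 827 | 34 |
| 9 | 6 | 4 | 2 | 12 | 25 | 9 | 0 | 21 | 4 | 926 |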

precision recall f1-score support

0 0.95 0.96 0.95 980

1 0.98 0.97 0.98 1135

2 0.94 0.96 0.95 1032

3 0.95 0.92 0.93 1010

4 0.93 0.90 0.92 982

5 0.91 0.96 0.93 892

6 0.96 0.98 0.97 958

7 0.95 0.90 0.93 1028

8 0.93 0.85 0.89 974

9 0.83 0.92 0.87 1009

accuracy 0.93 10000

macro avg 0.93 0.93 0.93 10000

weighted avg 0.93 0.93 0.93 10000
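The per-class numbers in the report above come straight from the confusion matrix printed above it. In that matrix, rows are the true digits and columns are the predicted digits. This is the layout of scikit-learn's `confusion_matrix`, and we assume that is what produced it.

The minimal NumPy sketch below recomputes precision, recall, F1-score, support and accuracy from that matrix. The matrix values are copied from the output; the function-free layout and variable names are only illustrative.

```python
import numpy as np

# Confusion matrix copied from the output above:
# rows = true digit, columns = predicted digit (scikit-learn convention).
cm = np.array([
    [943, 0, 2, 0, 2, 2, 15, 1, 8, 7],
    [1, 1105, 3, 2, 2, 1, 4, 2, 14, 1],
    [2, 1, 995, 7, 6, 2, 2, 10, 5, 2],
    [0, 2, 14, 926, 0, 40, 0, 4, 12, 12],
    [3, 0, 3, 0, 888, 0, 11, 4, 2, 71],
    [1, 4, 1, 11, 4, 858, 3, 0, 7, 3],
    [1, 7, 1, 0, 3, 8, 935, 0, 3, 0],
    [0, 4, 21, 6, 7, 1, 0, 928, 5, 56],
    [39, 3, 13, 8, 15, 23, 5, 7, 827, 34],
    [6, 4, 2, 12, 25, 9, 0, 21, 4, 926],
])

tp = np.diag(cm)                  # correctly classified samples per digit
support = cm.sum(axis=1)          # number of true samples per digit (row sums)
precision = tp / cm.sum(axis=0)   # TP / (TP + FP): divide by column sums
recall = tp / support             # TP / (TP + FN): divide by row sums
f1 = 2 * precision * recall / (precision + recall)
accuracy = tp.sum() / cm.sum()    # trace over total number of test samples

print("digit precision recall f1-score support")
for digit in range(10):
    print(f"{digit} {precision[digit]:.2f} {recall[digit]:.2f} "
          f"{f1[digit]:.2f} {support[digit]}")
print(f"accuracy {accuracy:.4f}")
```

Rounded to two decimals, this reproduces the precision, recall, F1-score and support columns of the report. The overall test accuracy comes out as 0.9331.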

histDilnonpos=see_results_layer(Dilation2D(nfilterstolearn, padding='valid',kernel_size=(filter_size, filter_size),kernel_constraint=NonPositive()),lr=.01)

Model: "model_4"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_3 (InputLayer) [(None, 28, 28, 1)] 0

_________________________________________________________________

dilation2d_2 (Dilation2D) (None, 24, 24, 8) 200

_________________________________________________________________

max_pooling2d_4 (MaxPooling2 (None, 12, 12, 8) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 10, 10, 32) 2336

_________________________________________________________________

max_pooling2d_5 (MaxPooling2 (None, 5, 5, 32) 0

_________________________________________________________________

flatten_2 (Flatten) (None, 800) 0

_________________________________________________________________

dropout_2 (Dropout) (None, 800) 0

_________________________________________________________________

dense_2 (Dense) (None, 10) 8010

=================================================================

Total params: 10,546

Trainable params: 10,546

Non-trainable params: 0

_________________________________________________________________

Epoch 1/100

8/8 [==============================] - 3s 399ms/step - loss: 2.1887 - accuracy: 0.2744 - val_loss: 1.6655 - val_accuracy: 0.5277 - lr: 0.0100

Epoch 2/100

8/8 [==============================] - 3s 403ms/step - loss: 1.3172 - accuracy: 0.5928 - val_loss: 0.8504 - val_accuracy: 0.7326 - lr: 0.0100

Epoch 3/100

8/8 [==============================] - 3s 411ms/step - loss: 0.9083 - accuracy: 0.7217 - val_loss: 0.6882 - val_accuracy: 0.7744 - lr: 0.0100

Epoch 4/100

8/8 [==============================] - 2s 263ms/step - loss: 0.7206 - accuracy: 0.7734 - val_loss: 0.5779 - val_accuracy: 0.8127 - lr: 0.0100

Epoch 5/100

8/8 [==============================] - 2s 233ms/step - loss: 0.6034 - accuracy: 0.8125 - val_loss: 0.5287 - val_accuracy: 0.8322 - lr: 0.0100

Epoch 6/100

8/8 [==============================] - 3s 317ms/step - loss: 0.5627 - accuracy: 0.8184 - val_loss: 0.4421 - val_accuracy: 0.8607 - lr: 0.0100

Epoch 7/100

8/8 [==============================] - 3s 395ms/step - loss: 0.4642 - accuracy: 0.8457 - val_loss: 0.4177 - val_accuracy: 0.8714 - lr: 0.0100

Epoch 8/100

8/8 [==============================] - 2s 276ms/step - loss: 0.4596 - accuracy: 0.8633 - val_loss: 0.3721 - val_accuracy: 0.8817 - lr: 0.0100

Epoch 9/100

8/8 [==============================] - 2s 267ms/step - loss: 0.4232 - accuracy: 0.8760 - val_loss: 0.3708 - val_accuracy: 0.8859 - lr: 0.0100

Epoch 10/100

8/8 [==============================] - 3s 323ms/step - loss: 0.4030 - accuracy: 0.8760 - val_loss: 0.3542 - val_accuracy: 0.8889 - lr: 0.0100

Epoch 11/100

8/8 [==============================] - 3s 352ms/step - loss: 0.3738 - accuracy: 0.8740 - val_loss: 0.3514 - val_accuracy: 0.8835 - lr: 0.0100

Epoch 12/100

8/8 [==============================] - 3s 359ms/step - loss: 0.3641 - accuracy: 0.8857 - val_loss: 0.3343 - val_accuracy: 0.8970 - lr: 0.0100

Epoch 13/100

8/8 [==============================] - 2s 270ms/step - loss: 0.3239 - accuracy: 0.9043 - val_loss: 0.3116 - val_accuracy: 0.8997 - lr: 0.0100

Epoch 14/100

8/8 [==============================] - 2s 276ms/step - loss: 0.3092 - accuracy: 0.8965 - val_loss: 0.3086 - val_accuracy: 0.8994 - lr: 0.0100

Epoch 15/100

8/8 [==============================] - 2s 253ms/step - loss: 0.3004 - accuracy: 0.9092 - val_loss: 0.2840 - val_accuracy: 0.9094 - lr: 0.0100

Epoch 16/100

8/8 [==============================] - 2s 257ms/step - loss: 0.2798 - accuracy: 0.9121 - val_loss: 0.2927 - val_accuracy: 0.9025 - lr: 0.0100

Epoch 17/100

8/8 [==============================] - 3s 319ms/step - loss: 0.2881 - accuracy: 0.9053 - val_loss: 0.2843 - val_accuracy: 0.9037 - lr: 0.0100

Epoch 18/100

8/8 [==============================] - 2s 245ms/step - loss: 0.2856 - accuracy: 0.9111 - val_loss: 0.2743 - val_accuracy: 0.9144 - lr: 0.0100

Epoch 19/100

8/8 [==============================] - 2s 298ms/step - loss: 0.2388 - accuracy: 0.9199 - val_loss: 0.2730 - val_accuracy: 0.9122 - lr: 0.0100

Epoch 20/100

8/8 [==============================] - 3s 335ms/step - loss: 0.2663 - accuracy: 0.9180 - val_loss: 0.2748 - val_accuracy: 0.9090 - lr: 0.0100

Epoch 21/100

8/8 [==============================] - 3s 365ms/step - loss: 0.2554 - accuracy: 0.9160 - val_loss: 0.2888 - val_accuracy: 0.9068 - lr: 0.0100

Epoch 22/100

8/8 [==============================] - 2s 250ms/step - loss: 0.2497 - accuracy: 0.9189 - val_loss: 0.2742 - val_accuracy: 0.9142 - lr: 0.0100

Epoch 23/100

8/8 [==============================] - 2s 233ms/step - loss: 0.2227 - accuracy: 0.9248 - val_loss: 0.2604 - val_accuracy: 0.9203 - lr: 0.0050

Epoch 24/100

8/8 [==============================] - 2s 228ms/step - loss: 0.1963 - accuracy: 0.9385 - val_loss: 0.2626 - val_accuracy: 0.9170 - lr: 0.0050

Epoch 25/100

8/8 [==============================] - 2s 277ms/step - loss: 0.2297 - accuracy: 0.9277 - val_loss: 0.2554 - val_accuracy: 0.9204 - lr: 0.0050

Epoch 26/100

8/8 [==============================] - 2s 257ms/step - loss: 0.1982 - accuracy: 0.9424 - val_loss: 0.2604 - val_accuracy: 0.9186 - lr: 0.0050

Epoch 27/100

8/8 [==============================] - 2s 235ms/step - loss: 0.1817 - accuracy: 0.9482 - val_loss: 0.2388 - val_accuracy: 0.9244 - lr: 0.0050

Epoch 28/100

8/8 [==============================] - 2s 218ms/step - loss: 0.1955 - accuracy: 0.9385 - val_loss: 0.2623 - val_accuracy: 0.9142 - lr: 0.0050

Epoch 29/100

8/8 [==============================] - 2s 227ms/step - loss: 0.1715 - accuracy: 0.9424 - val_loss: 0.2517 - val_accuracy: 0.9224 - lr: 0.0050

Epoch 30/100

8/8 [==============================] - 2s 232ms/step - loss: 0.1705 - accuracy: 0.9502 - val_loss: 0.2461 - val_accuracy: 0.9228 - lr: 0.0050

Epoch 31/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1588 - accuracy: 0.9492 - val_loss: 0.2382 - val_accuracy: 0.9263 - lr: 0.0025

Epoch 32/100

8/8 [==============================] - 2s 218ms/step - loss: 0.1873 - accuracy: 0.9453 - val_loss: 0.2395 - val_accuracy: 0.9244 - lr: 0.0025

Epoch 33/100

8/8 [==============================] - 2s 224ms/step - loss: 0.1461 - accuracy: 0.9531 - val_loss: 0.2382 - val_accuracy: 0.9252 - lr: 0.0025

Epoch 34/100

8/8 [==============================] - 2s 248ms/step - loss: 0.1435 - accuracy: 0.9502 - val_loss: 0.2434 - val_accuracy: 0.9240 - lr: 0.0025

Epoch 35/100

8/8 [==============================] - 2s 291ms/step - loss: 0.1583 - accuracy: 0.9512 - val_loss: 0.2445 - val_accuracy: 0.9250 - lr: 0.0012

Epoch 36/100

8/8 [==============================] - 2s 231ms/step - loss: 0.1448 - accuracy: 0.9590 - val_loss: 0.2442 - val_accuracy: 0.9244 - lr: 0.0012

Epoch 37/100

8/8 [==============================] - 2s 254ms/step - loss: 0.1383 - accuracy: 0.9531 - val_loss: 0.2410 - val_accuracy: 0.9239 - lr: 0.0012

Epoch 38/100

8/8 [==============================] - 3s 367ms/step - loss: 0.1315 - accuracy: 0.9541 - val_loss: 0.2377 - val_accuracy: 0.9259 - lr: 6.2500e-04

Epoch 39/100

8/8 [==============================] - 3s 401ms/step - loss: 0.1465 - accuracy: 0.9473 - val_loss: 0.2348 - val_accuracy: 0.9273 - lr: 6.2500e-04

Epoch 40/100

8/8 [==============================] - 3s 373ms/step - loss: 0.1614 - accuracy: 0.9541 - val_loss: 0.2356 - val_accuracy: 0.9267 - lr: 6.2500e-04

Epoch 41/100

8/8 [==============================] - 3s 382ms/step - loss: 0.1307 - accuracy: 0.9570 - val_loss: 0.2355 - val_accuracy: 0.9266 - lr: 6.2500e-04

Epoch 42/100

8/8 [==============================] - 3s 384ms/step - loss: 0.1453 - accuracy: 0.9521 - val_loss: 0.2345 - val_accuracy: 0.9285 - lr: 6.2500e-04

Epoch 43/100

8/8 [==============================] - 3s 377ms/step - loss: 0.1422 - accuracy: 0.9580 - val_loss: 0.2339 - val_accuracy: 0.9293 - lr: 6.2500e-04

Epoch 44/100

8/8 [==============================] - 3s 356ms/step - loss: 0.1385 - accuracy: 0.9619 - val_loss: 0.2321 - val_accuracy: 0.9288 - lr: 6.2500e-04

Epoch 45/100

8/8 [==============================] - 3s 386ms/step - loss: 0.1518 - accuracy: 0.9434 - val_loss: 0.2354 - val_accuracy: 0.9268 - lr: 6.2500e-04

Epoch 46/100

8/8 [==============================] - 3s 372ms/step - loss: 0.1412 - accuracy: 0.9531 - val_loss: 0.2368 - val_accuracy: 0.9257 - lr: 6.2500e-04

Epoch 47/100

8/8 [==============================] - 3s 385ms/step - loss: 0.1591 - accuracy: 0.9482 - val_loss: 0.2362 - val_accuracy: 0.9251 - lr: 6.2500e-04

Epoch 48/100

8/8 [==============================] - 3s 365ms/step - loss: 0.1285 - accuracy: 0.9531 - val_loss: 0.2339 - val_accuracy: 0.9258 - lr: 3.1250e-04

Epoch 49/100

8/8 [==============================] - 3s 359ms/step - loss: 0.1431 - accuracy: 0.9512 - val_loss: 0.2335 - val_accuracy: 0.9262 - lr: 3.1250e-04

Epoch 50/100

8/8 [==============================] - 3s 372ms/step - loss: 0.1447 - accuracy: 0.9531 - val_loss: 0.2329 - val_accuracy: 0.9271 - lr: 3.1250e-04

Epoch 51/100

8/8 [==============================] - 3s 378ms/step - loss: 0.1482 - accuracy: 0.9492 - val_loss: 0.2327 - val_accuracy: 0.9272 - lr: 1.5625e-04

Epoch 52/100

8/8 [==============================] - 3s 382ms/step - loss: 0.1387 - accuracy: 0.9492 - val_loss: 0.2323 - val_accuracy: 0.9270 - lr: 1.5625e-04

Epoch 53/100

8/8 [==============================] - 3s 389ms/step - loss: 0.1247 - accuracy: 0.9561 - val_loss: 0.2320 - val_accuracy: 0.9264 - lr: 1.5625e-04

Epoch 54/100

8/8 [==============================] - 3s 394ms/step - loss: 0.1203 - accuracy: 0.9639 - val_loss: 0.2321 - val_accuracy: 0.9265 - lr: 1.5625e-04

Epoch 55/100

8/8 [==============================] - 3s 379ms/step - loss: 0.1489 - accuracy: 0.9512 - val_loss: 0.2325 - val_accuracy: 0.9264 - lr: 1.5625e-04

Epoch 56/100

8/8 [==============================] - 3s 372ms/step - loss: 0.1369 - accuracy: 0.9512 - val_loss: 0.2322 - val_accuracy: 0.9263 - lr: 1.5625e-04

Epoch 57/100

8/8 [==============================] - 3s 366ms/step - loss: 0.1330 - accuracy: 0.9541 - val_loss: 0.2320 - val_accuracy: 0.9266 - lr: 7.8125e-05

Epoch 58/100

8/8 [==============================] - 3s 373ms/step - loss: 0.1347 - accuracy: 0.9561 - val_loss: 0.2319 - val_accuracy: 0.9268 - lr: 7.8125e-05

Epoch 59/100

8/8 [==============================] - 3s 391ms/step - loss: 0.1550 - accuracy: 0.9482 - val_loss: 0.2317 - val_accuracy: 0.9272 - lr: 7.8125e-05

Epoch 60/100

8/8 [==============================] - 3s 390ms/step - loss: 0.1419 - accuracy: 0.9541 - val_loss: 0.2316 - val_accuracy: 0.9276 - lr: 7.8125e-05

Epoch 61/100

8/8 [==============================] - 3s 393ms/step - loss: 0.1465 - accuracy: 0.9570 - val_loss: 0.2313 - val_accuracy: 0.9274 - lr: 7.8125e-05

Epoch 62/100

8/8 [==============================] - 3s 388ms/step - loss: 0.1423 - accuracy: 0.9580 - val_loss: 0.2311 - val_accuracy: 0.9275 - lr: 7.8125e-05

Epoch 63/100

8/8 [==============================] - 3s 397ms/step - loss: 0.1201 - accuracy: 0.9639 - val_loss: 0.2311 - val_accuracy: 0.9279 - lr: 7.8125e-05

Epoch 64/100

8/8 [==============================] - 3s 381ms/step - loss: 0.1684 - accuracy: 0.9502 - val_loss: 0.2314 - val_accuracy: 0.9278 - lr: 7.8125e-05

Epoch 65/100

8/8 [==============================] - 3s 386ms/step - loss: 0.1526 - accuracy: 0.9482 - val_loss: 0.2317 - val_accuracy: 0.9274 - lr: 7.8125e-05

Epoch 66/100

8/8 [==============================] - 3s 372ms/step - loss: 0.1508 - accuracy: 0.9502 - val_loss: 0.2318 - val_accuracy: 0.9276 - lr: 3.9062e-05

Epoch 67/100

8/8 [==============================] - 3s 368ms/step - loss: 0.1214 - accuracy: 0.9629 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 3.9062e-05

Epoch 68/100

8/8 [==============================] - 3s 373ms/step - loss: 0.1296 - accuracy: 0.9688 - val_loss: 0.2320 - val_accuracy: 0.9275 - lr: 3.9062e-05

Epoch 69/100

8/8 [==============================] - 3s 402ms/step - loss: 0.1016 - accuracy: 0.9658 - val_loss: 0.2319 - val_accuracy: 0.9277 - lr: 1.9531e-05

Epoch 70/100

8/8 [==============================] - 3s 376ms/step - loss: 0.1421 - accuracy: 0.9541 - val_loss: 0.2319 - val_accuracy: 0.9273 - lr: 1.9531e-05

Epoch 71/100

8/8 [==============================] - 3s 390ms/step - loss: 0.1300 - accuracy: 0.9658 - val_loss: 0.2319 - val_accuracy: 0.9275 - lr: 1.9531e-05

Epoch 72/100

8/8 [==============================] - 3s 365ms/step - loss: 0.1282 - accuracy: 0.9502 - val_loss: 0.2319 - val_accuracy: 0.9275 - lr: 9.7656e-06

Epoch 73/100

8/8 [==============================] - 3s 377ms/step - loss: 0.1160 - accuracy: 0.9658 - val_loss: 0.2319 - val_accuracy: 0.9275 - lr: 9.7656e-06

Epoch 74/100

8/8 [==============================] - 3s 349ms/step - loss: 0.1318 - accuracy: 0.9521 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 9.7656e-06

Epoch 75/100

8/8 [==============================] - 2s 231ms/step - loss: 0.1410 - accuracy: 0.9531 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 4.8828e-06

Epoch 76/100

8/8 [==============================] - 2s 256ms/step - loss: 0.1262 - accuracy: 0.9570 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 4.8828e-06

Epoch 77/100

8/8 [==============================] - 3s 368ms/step - loss: 0.1260 - accuracy: 0.9570 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 4.8828e-06

Epoch 78/100

8/8 [==============================] - 3s 385ms/step - loss: 0.1371 - accuracy: 0.9551 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 2.4414e-06

Epoch 79/100

8/8 [==============================] - 3s 358ms/step - loss: 0.1373 - accuracy: 0.9570 - val_loss: 0.2319 - val_accuracy: 0.9274 - lr: 2.4414e-06

[[ 945 0 5 2 1 0 16 1 6 4]

[ 1 1090 4 2 2 1 3 1 26 5]

[ 4 3 978 12 6 1 4 16 6 2]

[ 0 1 19 927 2 34 0 8 16 3]

[ 4 0 4 1 886 0 10 5 4 68]

[ 2 0 1 8 3 846 6 2 19 5]

[ 1 5 0 0 4 12 933 1 2 0]

[ 0 3 30 7 10 0 0 919 5 54]

[ 33 2 11 10 13 20 4 14 820 47]

[ 7 4 2 12 22 6 1 19 3 933]]

precision recall f1-score support

0 0.95 0.96 0.96 980

1 0.98 0.96 0.97 1135

2 0.93 0.95 0.94 1032

3 0.94 0.92 0.93 1010

4 0.93 0.90 0.92 982

5 0.92 0.95 0.93 892

6 0.95 0.97 0.96 958

7 0.93 0.89 0.91 1028

8 0.90 0.84 0.87 974

9 0.83 0.92 0.88 1009

accuracy 0.93 10000

macro avg 0.93 0.93 0.93 10000

weighted avg 0.93 0.93 0.93 10000
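The run above passes `kernel_constraint=NonPositive()`. In the projected gradient descent view described at the top of this page, this constraint replaces \(\theta_{t+1}\) by its nearest point in the set \(\{\theta : \theta \le 0\}\). Under the Euclidean distance, that nearest point is simply the elementwise \(\min(\theta, 0)\).

In Keras, such a projection is what a `tf.keras.constraints.Constraint` subclass returns from its `__call__(w)` method. Keras applies it to the variable after each optimizer update. The notebook's own `NonPositive` class is defined elsewhere and may be implemented differently.

The NumPy sketch below only illustrates the projection step. The helper names, kernel, gradient and learning rate are all hypothetical values chosen for illustration.

```python
import numpy as np

def project_nonpositive(theta):
    """Euclidean projection onto {theta : theta <= 0}: clip positive entries to 0."""
    return np.minimum(theta, 0.0)

def projected_sgd_step(theta, grad, lr):
    """One projected gradient step: plain SGD update, then projection."""
    return project_nonpositive(theta - lr * grad)

# Hypothetical 2x2 structuring element, gradient and learning rate (illustration only).
theta = np.array([[-0.5, -0.1], [-0.2, 0.0]])
grad = np.array([[1.0, -20.0], [0.5, -3.0]])
lr = 0.01

theta_next = projected_sgd_step(theta, grad, lr)
print(theta_next)  # entries pushed above zero by the SGD step are clipped back to 0
```

The unconstrained step would give `[[-0.51, 0.1], [-0.205, 0.03]]`. The projection keeps the negative entries and sets the two positive ones to zero.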

histDilnonposinc=see_results_layer(Dilation2D(nfilterstolearn, padding='valid',kernel_size=(filter_size, filter_size),kernel_constraint=NonPositiveIncreasing()),lr=.01)

Model: "model_6"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_4 (InputLayer) [(None, 28, 28, 1)] 0

_________________________________________________________________

dilation2d_3 (Dilation2D) (None, 24, 24, 8) 200

_________________________________________________________________

max_pooling2d_6 (MaxPooling2 (None, 12, 12, 8) 0

_________________________________________________________________

conv2d_3 (Conv2D) (None, 10, 10, 32) 2336

_________________________________________________________________

max_pooling2d_7 (MaxPooling2 (None, 5, 5, 32) 0

_________________________________________________________________

flatten_3 (Flatten) (None, 800) 0

_________________________________________________________________

dropout_3 (Dropout) (None, 800) 0

_________________________________________________________________

dense_3 (Dense) (None, 10) 8010

=================================================================

Total params: 10,546

Trainable params: 10,546

Non-trainable params: 0

_________________________________________________________________

Epoch 1/100

8/8 [==============================] - 2s 287ms/step - loss: 2.1822 - accuracy: 0.2695 - val_loss: 1.6500 - val_accuracy: 0.5234 - lr: 0.0100

Epoch 2/100

8/8 [==============================] - 2s 246ms/step - loss: 1.3930 - accuracy: 0.5791 - val_loss: 0.8952 - val_accuracy: 0.7285 - lr: 0.0100

Epoch 3/100

8/8 [==============================] - 2s 271ms/step - loss: 0.9682 - accuracy: 0.6895 - val_loss: 0.7192 - val_accuracy: 0.7670 - lr: 0.0100

Epoch 4/100

8/8 [==============================] - 2s 286ms/step - loss: 0.7655 - accuracy: 0.7617 - val_loss: 0.5966 - val_accuracy: 0.8078 - lr: 0.0100

Epoch 5/100

8/8 [==============================] - 2s 247ms/step - loss: 0.6665 - accuracy: 0.8047 - val_loss: 0.5249 - val_accuracy: 0.8271 - lr: 0.0100

Epoch 6/100

8/8 [==============================] - 2s 275ms/step - loss: 0.6101 - accuracy: 0.8096 - val_loss: 0.5032 - val_accuracy: 0.8389 - lr: 0.0100

Epoch 7/100

8/8 [==============================] - 2s 284ms/step - loss: 0.4901 - accuracy: 0.8457 - val_loss: 0.4304 - val_accuracy: 0.8574 - lr: 0.0100

Epoch 8/100

8/8 [==============================] - 2s 241ms/step - loss: 0.4808 - accuracy: 0.8418 - val_loss: 0.3858 - val_accuracy: 0.8779 - lr: 0.0100

Epoch 9/100

8/8 [==============================] - 2s 261ms/step - loss: 0.4336 - accuracy: 0.8740 - val_loss: 0.3739 - val_accuracy: 0.8822 - lr: 0.0100

Epoch 10/100

8/8 [==============================] - 3s 351ms/step - loss: 0.3705 - accuracy: 0.8799 - val_loss: 0.3417 - val_accuracy: 0.8904 - lr: 0.0100

Epoch 11/100

8/8 [==============================] - 3s 405ms/step - loss: 0.3891 - accuracy: 0.8848 - val_loss: 0.3499 - val_accuracy: 0.8917 - lr: 0.0100

Epoch 12/100

8/8 [==============================] - 3s 413ms/step - loss: 0.3743 - accuracy: 0.8760 - val_loss: 0.3095 - val_accuracy: 0.9048 - lr: 0.0100

Epoch 13/100

8/8 [==============================] - 3s 410ms/step - loss: 0.3396 - accuracy: 0.8809 - val_loss: 0.3196 - val_accuracy: 0.8978 - lr: 0.0100

Epoch 14/100

8/8 [==============================] - 3s 368ms/step - loss: 0.3173 - accuracy: 0.8955 - val_loss: 0.3070 - val_accuracy: 0.9024 - lr: 0.0100

Epoch 15/100

8/8 [==============================] - 3s 434ms/step - loss: 0.2983 - accuracy: 0.9053 - val_loss: 0.2898 - val_accuracy: 0.9050 - lr: 0.0100

Epoch 16/100

8/8 [==============================] - 2s 285ms/step - loss: 0.2928 - accuracy: 0.9043 - val_loss: 0.2946 - val_accuracy: 0.9099 - lr: 0.0100

Epoch 17/100

8/8 [==============================] - 3s 313ms/step - loss: 0.2756 - accuracy: 0.9180 - val_loss: 0.3014 - val_accuracy: 0.9016 - lr: 0.0100

Epoch 18/100

8/8 [==============================] - 3s 337ms/step - loss: 0.3195 - accuracy: 0.9014 - val_loss: 0.3061 - val_accuracy: 0.8986 - lr: 0.0100

Epoch 19/100

8/8 [==============================] - 2s 240ms/step - loss: 0.2648 - accuracy: 0.9121 - val_loss: 0.2762 - val_accuracy: 0.9116 - lr: 0.0050

Epoch 20/100

8/8 [==============================] - 2s 289ms/step - loss: 0.2466 - accuracy: 0.9170 - val_loss: 0.2730 - val_accuracy: 0.9121 - lr: 0.0050

Epoch 21/100

8/8 [==============================] - 3s 341ms/step - loss: 0.2019 - accuracy: 0.9326 - val_loss: 0.2663 - val_accuracy: 0.9182 - lr: 0.0050

Epoch 22/100

8/8 [==============================] - 3s 389ms/step - loss: 0.2234 - accuracy: 0.9189 - val_loss: 0.2613 - val_accuracy: 0.9165 - lr: 0.0050

Epoch 23/100

8/8 [==============================] - 2s 304ms/step - loss: 0.2188 - accuracy: 0.9297 - val_loss: 0.2610 - val_accuracy: 0.9190 - lr: 0.0050

Epoch 24/100

8/8 [==============================] - 2s 270ms/step - loss: 0.2075 - accuracy: 0.9424 - val_loss: 0.2560 - val_accuracy: 0.9196 - lr: 0.0050

Epoch 25/100

8/8 [==============================] - 2s 291ms/step - loss: 0.2108 - accuracy: 0.9297 - val_loss: 0.2654 - val_accuracy: 0.9130 - lr: 0.0050

Epoch 26/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1898 - accuracy: 0.9404 - val_loss: 0.2500 - val_accuracy: 0.9225 - lr: 0.0050

Epoch 27/100

8/8 [==============================] - 2s 254ms/step - loss: 0.2061 - accuracy: 0.9365 - val_loss: 0.2616 - val_accuracy: 0.9156 - lr: 0.0050

Epoch 28/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1886 - accuracy: 0.9385 - val_loss: 0.2489 - val_accuracy: 0.9212 - lr: 0.0050

Epoch 29/100

8/8 [==============================] - 2s 264ms/step - loss: 0.1825 - accuracy: 0.9385 - val_loss: 0.2488 - val_accuracy: 0.9243 - lr: 0.0050

Epoch 30/100

8/8 [==============================] - 2s 249ms/step - loss: 0.1959 - accuracy: 0.9404 - val_loss: 0.2393 - val_accuracy: 0.9240 - lr: 0.0050

Epoch 31/100

8/8 [==============================] - 2s 240ms/step - loss: 0.2120 - accuracy: 0.9424 - val_loss: 0.2448 - val_accuracy: 0.9226 - lr: 0.0050

Epoch 32/100

8/8 [==============================] - 2s 267ms/step - loss: 0.1691 - accuracy: 0.9482 - val_loss: 0.2489 - val_accuracy: 0.9237 - lr: 0.0050

Epoch 33/100

8/8 [==============================] - 2s 265ms/step - loss: 0.1505 - accuracy: 0.9561 - val_loss: 0.2509 - val_accuracy: 0.9203 - lr: 0.0050

Epoch 34/100

8/8 [==============================] - 2s 286ms/step - loss: 0.1629 - accuracy: 0.9443 - val_loss: 0.2383 - val_accuracy: 0.9243 - lr: 0.0025

Epoch 35/100

8/8 [==============================] - 2s 228ms/step - loss: 0.1539 - accuracy: 0.9473 - val_loss: 0.2421 - val_accuracy: 0.9227 - lr: 0.0025

Epoch 36/100

8/8 [==============================] - 2s 218ms/step - loss: 0.1438 - accuracy: 0.9541 - val_loss: 0.2397 - val_accuracy: 0.9247 - lr: 0.0025

Epoch 37/100

8/8 [==============================] - 2s 235ms/step - loss: 0.1548 - accuracy: 0.9414 - val_loss: 0.2341 - val_accuracy: 0.9291 - lr: 0.0025

Epoch 38/100

8/8 [==============================] - 2s 272ms/step - loss: 0.1690 - accuracy: 0.9443 - val_loss: 0.2404 - val_accuracy: 0.9249 - lr: 0.0025

Epoch 39/100

8/8 [==============================] - 2s 233ms/step - loss: 0.1482 - accuracy: 0.9551 - val_loss: 0.2448 - val_accuracy: 0.9231 - lr: 0.0025

Epoch 40/100

8/8 [==============================] - 2s 225ms/step - loss: 0.1583 - accuracy: 0.9551 - val_loss: 0.2354 - val_accuracy: 0.9277 - lr: 0.0025

Epoch 41/100

8/8 [==============================] - 2s 225ms/step - loss: 0.1562 - accuracy: 0.9502 - val_loss: 0.2377 - val_accuracy: 0.9256 - lr: 0.0012

Epoch 42/100

8/8 [==============================] - 2s 223ms/step - loss: 0.1664 - accuracy: 0.9434 - val_loss: 0.2354 - val_accuracy: 0.9268 - lr: 0.0012

Epoch 43/100

8/8 [==============================] - 2s 225ms/step - loss: 0.1480 - accuracy: 0.9492 - val_loss: 0.2354 - val_accuracy: 0.9288 - lr: 0.0012

Epoch 44/100

8/8 [==============================] - 2s 219ms/step - loss: 0.1540 - accuracy: 0.9492 - val_loss: 0.2304 - val_accuracy: 0.9278 - lr: 6.2500e-04

Epoch 45/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1349 - accuracy: 0.9600 - val_loss: 0.2320 - val_accuracy: 0.9274 - lr: 6.2500e-04

Epoch 46/100

8/8 [==============================] - 3s 400ms/step - loss: 0.1419 - accuracy: 0.9590 - val_loss: 0.2347 - val_accuracy: 0.9263 - lr: 6.2500e-04

Epoch 47/100

8/8 [==============================] - 2s 299ms/step - loss: 0.1360 - accuracy: 0.9570 - val_loss: 0.2333 - val_accuracy: 0.9266 - lr: 6.2500e-04

Epoch 48/100

8/8 [==============================] - 2s 266ms/step - loss: 0.1349 - accuracy: 0.9580 - val_loss: 0.2327 - val_accuracy: 0.9268 - lr: 3.1250e-04

Epoch 49/100

8/8 [==============================] - 2s 230ms/step - loss: 0.1348 - accuracy: 0.9580 - val_loss: 0.2318 - val_accuracy: 0.9274 - lr: 3.1250e-04

Epoch 50/100

8/8 [==============================] - 2s 246ms/step - loss: 0.1350 - accuracy: 0.9590 - val_loss: 0.2308 - val_accuracy: 0.9281 - lr: 3.1250e-04

Epoch 51/100

8/8 [==============================] - 2s 245ms/step - loss: 0.1303 - accuracy: 0.9609 - val_loss: 0.2305 - val_accuracy: 0.9282 - lr: 1.5625e-04

Epoch 52/100

8/8 [==============================] - 2s 255ms/step - loss: 0.1694 - accuracy: 0.9482 - val_loss: 0.2307 - val_accuracy: 0.9282 - lr: 1.5625e-04

Epoch 53/100

8/8 [==============================] - 2s 250ms/step - loss: 0.1312 - accuracy: 0.9609 - val_loss: 0.2307 - val_accuracy: 0.9275 - lr: 1.5625e-04

Epoch 54/100

8/8 [==============================] - 2s 247ms/step - loss: 0.1403 - accuracy: 0.9551 - val_loss: 0.2305 - val_accuracy: 0.9274 - lr: 7.8125e-05

Epoch 55/100

8/8 [==============================] - 2s 242ms/step - loss: 0.1341 - accuracy: 0.9551 - val_loss: 0.2305 - val_accuracy: 0.9276 - lr: 7.8125e-05

Epoch 56/100

8/8 [==============================] - 2s 228ms/step - loss: 0.1389 - accuracy: 0.9531 - val_loss: 0.2303 - val_accuracy: 0.9276 - lr: 7.8125e-05

Epoch 57/100

8/8 [==============================] - 2s 258ms/step - loss: 0.1245 - accuracy: 0.9502 - val_loss: 0.2302 - val_accuracy: 0.9280 - lr: 3.9062e-05

Epoch 58/100

8/8 [==============================] - 2s 243ms/step - loss: 0.1429 - accuracy: 0.9570 - val_loss: 0.2302 - val_accuracy: 0.9277 - lr: 3.9062e-05

Epoch 59/100

8/8 [==============================] - 2s 209ms/step - loss: 0.1401 - accuracy: 0.9561 - val_loss: 0.2301 - val_accuracy: 0.9278 - lr: 3.9062e-05

Epoch 60/100

8/8 [==============================] - 2s 207ms/step - loss: 0.1270 - accuracy: 0.9629 - val_loss: 0.2299 - val_accuracy: 0.9275 - lr: 3.9062e-05

Epoch 61/100

8/8 [==============================] - 2s 211ms/step - loss: 0.1340 - accuracy: 0.9629 - val_loss: 0.2299 - val_accuracy: 0.9275 - lr: 3.9062e-05

Epoch 62/100

8/8 [==============================] - 2s 209ms/step - loss: 0.1304 - accuracy: 0.9580 - val_loss: 0.2298 - val_accuracy: 0.9274 - lr: 3.9062e-05

Epoch 63/100

8/8 [==============================] - 2s 222ms/step - loss: 0.1410 - accuracy: 0.9570 - val_loss: 0.2297 - val_accuracy: 0.9277 - lr: 3.9062e-05

Epoch 64/100

8/8 [==============================] - 2s 245ms/step - loss: 0.1452 - accuracy: 0.9609 - val_loss: 0.2297 - val_accuracy: 0.9277 - lr: 3.9062e-05

Epoch 65/100

8/8 [==============================] - 2s 236ms/step - loss: 0.1382 - accuracy: 0.9570 - val_loss: 0.2298 - val_accuracy: 0.9278 - lr: 3.9062e-05

Epoch 66/100

8/8 [==============================] - 2s 211ms/step - loss: 0.1429 - accuracy: 0.9521 - val_loss: 0.2299 - val_accuracy: 0.9280 - lr: 1.9531e-05

Epoch 67/100

8/8 [==============================] - 2s 232ms/step - loss: 0.1172 - accuracy: 0.9590 - val_loss: 0.2298 - val_accuracy: 0.9280 - lr: 1.9531e-05

Epoch 68/100

8/8 [==============================] - 2s 234ms/step - loss: 0.1308 - accuracy: 0.9600 - val_loss: 0.2299 - val_accuracy: 0.9280 - lr: 1.9531e-05

Epoch 69/100

8/8 [==============================] - 2s 223ms/step - loss: 0.1252 - accuracy: 0.9570 - val_loss: 0.2299 - val_accuracy: 0.9280 - lr: 9.7656e-06

Epoch 70/100

8/8 [==============================] - 2s 214ms/step - loss: 0.1401 - accuracy: 0.9551 - val_loss: 0.2299 - val_accuracy: 0.9278 - lr: 9.7656e-06

Epoch 71/100

8/8 [==============================] - 2s 232ms/step - loss: 0.1480 - accuracy: 0.9453 - val_loss: 0.2299 - val_accuracy: 0.9278 - lr: 9.7656e-06

Epoch 72/100

8/8 [==============================] - 2s 222ms/step - loss: 0.1481 - accuracy: 0.9502 - val_loss: 0.2299 - val_accuracy: 0.9279 - lr: 4.8828e-06

Epoch 73/100

8/8 [==============================] - 2s 235ms/step - loss: 0.1244 - accuracy: 0.9590 - val_loss: 0.2299 - val_accuracy: 0.9278 - lr: 4.8828e-06

Epoch 74/100

8/8 [==============================] - 2s 230ms/step - loss: 0.1841 - accuracy: 0.9355 - val_loss: 0.2299 - val_accuracy: 0.9278 - lr: 4.8828e-06

Epoch 75/100

8/8 [==============================] - 2s 235ms/step - loss: 0.1331 - accuracy: 0.9521 - val_loss: 0.2299 - val_accuracy: 0.9278 - lr: 2.4414e-06

Epoch 76/100

8/8 [==============================] - 2s 240ms/step - loss: 0.1392 - accuracy: 0.9570 - val_loss: 0.2299 - val_accuracy: 0.9279 - lr: 2.4414e-06

Epoch 77/100

8/8 [==============================] - 2s 236ms/step - loss: 0.1500 - accuracy: 0.9502 - val_loss: 0.2299 - val_accuracy: 0.9279 - lr: 2.4414e-06

[[ 946 0 2 1 0 2 15 3 6 5]

[ 0 1099 3 1 3 0 3 2 24 0]

[ 4 1 979 16 9 1 2 12 4 4]

[ 6 1 31 905 1 38 0 8 14 6]

[ 1 1 5 1 877 0 9 4 5 79]

[ 4 1 1 15 2 847 5 2 14 1]

[ 3 5 1 0 7 18 923 0 1 0]

[ 0 5 30 9 6 1 0 928 4 45]

[ 25 1 10 11 12 21 2 16 828 48]

[ 6 5 6 8 12 6 1 13 4 948]]

precision recall f1-score support

0 0.95 0.97 0.96 980

1 0.98 0.97 0.98 1135

2 0.92 0.95 0.93 1032

3 0.94 0.90 0.92 1010

4 0.94 0.89 0.92 982

5 0.91 0.95 0.93 892

6 0.96 0.96 0.96 958

7 0.94 0.90 0.92 1028

8 0.92 0.85 0.88 974

9 0.83 0.94 0.88 1009

accuracy 0.93 10000

macro avg 0.93 0.93 0.93 10000

weighted avg 0.93 0.93 0.93 10000
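In the logs of this section, the `lr` column stays at 0.0100 for a while and is then repeatedly halved: 0.0050, 0.0025, 0.0012, 6.2500e-04, and so on. The drops happen at irregular epochs. This is consistent with a plateau-based schedule such as Keras' `ReduceLROnPlateau` with `factor=0.5`. The callback configuration is not shown in this section, so that is an assumption.

The short check below tests only the arithmetic: it confirms that the printed values match \(0.01 \cdot 0.5^k\).

```python
# Learning rates printed in the training logs, in order of appearance.
printed = [0.0100, 0.0050, 0.0025, 0.0012, 6.2500e-04, 3.1250e-04,
           1.5625e-04, 7.8125e-05, 3.9062e-05, 1.9531e-05,
           9.7656e-06, 4.8828e-06, 2.4414e-06]

# Assumed schedule: initial rate 0.01, halved at each reduction.
predicted = [0.01 * 0.5 ** k for k in range(len(printed))]

# The progress bar shows rounded values (e.g. 0.00125 appears as 0.0012),
# so compare with a relative tolerance rather than exact equality.
matches = [abs(p - q) / q < 0.05 for p, q in zip(printed, predicted)]

for k, (p, q, ok) in enumerate(zip(printed, predicted, matches)):
    print(f"k={k:2d} printed={p:.4e} predicted={q:.4e} match={ok}")
```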

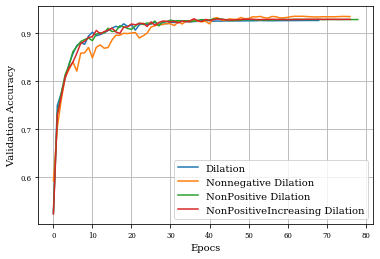


from myst_nb import glue

a=np.round(max(histDil.history['val_accuracy']),4)

b=np.round(max(histDilnonneg.history['val_accuracy']),4)

c=np.round(max(histDilnonpos.history['val_accuracy']),4)

d=np.round(max(histDilnonposinc.history['val_accuracy']),4)

glue("BestDilation",a,display=False)

glue("BestDilation Nonnegative",b ,display=False)

glue("BestDilation Nonpositive",c,display=False)

glue("BestDilation Nonpositive Increasing",d,display=False)

The unconstrained dilation layer reaches a best validation accuracy of 0.9688, compared with:

a. 0.9343 for the non-negative dilation.

b. 0.9293 for the non-positive dilation.

c. 0.9291 for the non-positive and increasing dilation.

plt.plot(histDil.history['val_accuracy'],label='Dilation')

plt.plot(histDilnonneg.history['val_accuracy'],label='Nonnegative Dilation')

plt.plot(histDilnonpos.history['val_accuracy'],label='Nonpositive Dilation')

plt.plot(histDilnonposinc.history['val_accuracy'],label='Nonpositive Increasing Dilation')

plt.xlabel('Epochs')

plt.ylabel('Validation Accuracy')

plt.grid()

plt.legend()

plt.show()